Introduction

ChatGPT released by OpenAI is a versatile Natural Language Processing (NLP) system that comprehends the conversation context to provide relevant responses. Although little is known about construction of this model, it has become popular due to its quality in solving natural language tasks. It supports various languages, including English, Spanish, French, and German. One of the advantages of this model is that it can generate answers in diverse styles: formal, informal, and humorous.

The base model behind ChatGPT only has 3.5B parameters, yet it provides answers better than the GPT3 model that has 175B parameters. This highlights the importance of collecting human data for supervised model fine-tuning. ChatGPT has been evaluated on well-known natural language processing tasks, and this article will compare its performance with supervised transformer-based models from the DeepPavlov Library on question answering tasks. For this article, I have prepared a Google Colab notebook for you to try using models from the DeepPavlov Library for some QA tasks.

Intro to Question Answering

Question answering is a natural language processing task used in various domains (e.g. customer service, education, healthcare), where the goal is to provide an appropriate answer to questions asked in natural language. There are several types of Question Answering tasks, such as factoid QA, where the answer is a short fact or a piece of information, and non-factoid QA, where the answer is an opinion or a longer explanation. Question Answering has been an active research area in NLP for many years so there are several datasets that have been created for evaluating QA systems. Here are some datasets for QA that we will look at in more detail in this article: SQuAD, BoolQ, and QNLI.

When developing effective QA systems, several problems arise, such as handling ambiguity and variability of natural language, dealing with large amounts of text and understanding complex language structures and contexts. Advances in deep learning and other NLP techniques have helped solve some of these challenges and have led to significant improvements in performance of QA systems in recent years.

The DeepPavlov Library uses BERT base models to deal with Question Answering, such as RoBERTa. BERT is a pre-trained transformer-based deep learning model for natural language processing that achieved state-of-the-art results across a wide array of natural language processing tasks when this model was proposed. There are also loads of models with the BERT-like architecture that are trained to deal with tasks in specific languages besides English: Chinese, German, French, Italian, Spanish, and many others. It can be used to solve a wide variety of language problems, while changing only one layer in the original model.

Intro to DeepPavlov Library

The DeepPavlov Library is a free, open-source NLP framework that includes state-of-the-art NLP models that can be utilized alone or as part of the DeepPavlov Dream. This Multi-Skill AI Assistant Platform offers various text classification models for intent recognition, topic classification, and insult identification.

PyTorch is the underlying machine learning framework that DeepPavlov framework employs. The DeepPavlov Library is implemented in Python and supports Python versions 3.6–3.9. Also the library supports the use of transformer-based models from the Hugging Face Hub through Hugging Face Transformers. Interaction with the models is possible either via the command-line interface (CLI), the application programming interface (API), or through Python pipelines. Please note that specific models -- may have additional installation requirements.

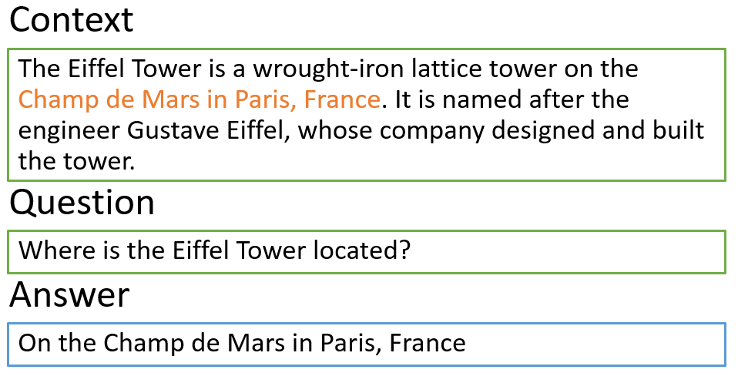

Reading Comprehension (SQuAD 2.0)

Let’s start our comparison with the reading comprehension task, more specifically, SQuAD 2.0 dataset. This is a large-scale, open-domain question answering dataset that contains over 100,000 questions with answers based on a given passage of text. Unlike the original SQuAD dataset, SQuAD 2.0 includes questions that cannot be answered solely based on the provided passage, which makes it more challenging for machine learning models to answer accurately.

Despite the fact that the first version of SQuAD was released back in 2016 and that it contained answers to questions about Wikipedia articles, the QA in the SQuAD statement is still relevant. After training the model on this dataset, it’s possible to provide information not only from Wikipedia as context, but also information from official documents like contracts, company reports, etc. Thus, it is possible to extract facts from a broad range of texts not known during training.

Let’s now move on to composing a question for ChatGPT. To make the answers more stable, I restarted the session after each question. So, the following prompt was used to get answers on examples in SQuAD-style:

Please answer the given question based on the context. If there is no answer in the context, reply NONE.

context: ['context']

question: ['question']

Let's now look at and compare the results. Even though ChatGPT is exceptionally good at answering questions, experiments on one thousand sampled examples showed that ChatGPT lags far behind existing solutions when answering questions about a given context. If we carefully consider the examples on which the model is wrong, it turns out that ChatGPT doesn’t cope well with answering a question by context when the answer may not actually be in the presented context; also, it does not perform well when questioned about the order of events in time. The DeepPavlov library, on the other hand, provides the correct output in all these cases. Few examples are following:

Q: Please answer the given question based on the context. If there is no answer in the context, reply NONE.

context: The service started on 1 September 1993 based on the idea from the then chief executive officer, Sam Chisholm and Rupert Murdoch, of converting the company business strategy to an entirely fee-based concept. The new package included four channels formerly available free-to-air, broadcasting on Astra's satellites, as well as introducing new channels. The service continued until the closure of BSkyB's analogue service on 27 September 2001, due to the launch and expansion of the Sky Digital platform. Some of the channels did broadcast either in the clear or soft encrypted (whereby a Videocrypt decoder was required to decode, without a subscription card) prior to their addition to the Sky Multichannels package. Within two months of the launch, BSkyB gained 400,000 new subscribers, with the majority taking at least one premium channel as well, which helped BSkyB reach 400 million households by mid-1994. Michael Grade criticized the operations in front of the Select Committee on National Heritage, mainly for the lack of original programming on many of the new channels.

question: When did BSkyB gain 400 subscribers?

ChatGPT: BSkyB gained 400,000 new subscribers within two months of the launch.

Expected: NONE

DeepPavlov: NONE

Here we see that ChatGPT doesn’t always work well with numeric values. This may be due to the fact that in this case the model is trying to get an answer from the context, although in fact there is no answer.

Q: Please answer the given question based on the context. If there is no answer in the context, reply NONE.

context: A series of civil unrest incidents began in France on 19 January 2023, organised by opponents of the pension reform bill proposed by the Borne government, which would increase the retirement age from 62 to 64 years old. The strikes have led to widespread disruption, including garbage piling up in the streets and public transport cancellations. In March, the government used Article 49.3 of the Constitution to force the bill through the French Parliament, sparking more protests and two failed no confidence votes, contributing to an increase in violence in protests alongside the union-organised strike action. In 2023, the popularity rating of French president Macron is only 25%, although in 2021-2022 it was 50%.

question: Who was the French president in 2022?

ChatGPT: The French president in 2022 is not mentioned in the given context. Therefore, the answer is NONE.

Expected: Macron

DeepPavlov: Macron

In the example above, I specifically took a context that has enough information to draw the right conclusion but that ChatGPT definitely didn’t come across during training (so the model didn’t know who was the president of France in 2022 and had to rely solely on the given context).

Q: Please answer the given question based on the context. If there is no answer in the context, reply NONE.

context: Company X releases new models every year on the 1st of September. K-3 model was released in 2003. K-1 model that was released in 2001 and K-2 model released in 2002 are the only models before the release of K-3 model.

question: What is the name of the model released one year before the release of K-2 model?

ChatGPT: NONE.

Expected: K-1

DeepPavlov: K-1

This is another example that ChatGPT hasn’t seen. And as we can see, here it also cannot answer the question about the order of events in time.

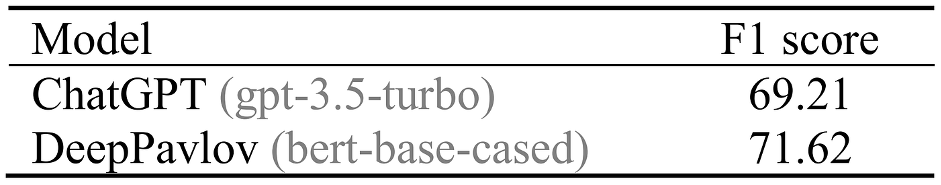

For quantitative comparison of ChatGPT and DeepPavlov SQuAD 2.0 pretrained model I used the sampled set from the paper. Although the model from the DeepPavlov library shows better results than ChatGPT, it isn’t much ahead on the tested sample. This may be due to the mistakes in the layout of the dataset. If it is corrected, the results of both models are expected to improve.

Natural Yes/No Questions (BoolQ)

DeepPavlov Library also contains a model that has been trained on BoolQ dataset to answer natural questions in the yes/no format. BoolQ is a machine learning dataset containing over 150,000 yes/no questions, created by Google Research to test the ability of natural language processing algorithms to accurately answer binary questions based on a given text passage.

Let's see how to get responses from ChatGPT using BoolQ examples. One can use the prompt from the article:

Please answer the given question based on the context. The answer should be exact 'yes' or 'no'

context: ['context']

question: ['question']

As you can see, the prompt is simple and shouldn’t confuse the model. Some experiments have already been carried out and showed that the accuracy of ChatGPT on the BoolQ dataset (reading comprehension) is 86.8. However, the best model achieves 91.2. While the difference between the compared models isn’t too big, here some examples where ChatGPT lags behind the model from the DeepPavlov Library:

Q: Please answer the given question based on the context. The answer should be exact 'yes' or 'no'.

context: Cloak – Ladies may wear a long cloak called a short cape, or a full-length cloak. Gentlemen wear an ankle-length or full-length cloak. Formal cloaks often have expensive, colored linings and trimmings such as silk, satin, velvet and fur.

question: Are a short cape and a long cloak the same?

ChatGPT: No.

Expected: Yes

DeepPavlov: Yes

Despite the fact that in the given example it’s written that the long cloak is called a short cape – ChatGPT couldn’t generalize this information, from which it follows that it is the same thing.

Q: Please answer the given question based on the context. The answer should be exact 'yes' or 'no'.

context: The SpaceX Starship orbital test flight on April 20, 2023, was the first launch of SpaceX's Starship spacecraft atop the Super Heavy first-stage booster. A sequel to suborbital tests of the spacecraft, the uncrewed flight ended four minutes after liftoff when the vehicle began to tumble and was destroyed. The combined vehicle was the tallest and most powerful rocket ever flown, with twice the thrust of the Saturn V super heavy-lift launch vehicle developed in the 1960s.

question: Is the Saturn V the tallest rocket ever flying?

ChatGPT: Yes.

Expected: No

DeepPavlov: No

Based on the given example, I can assume that the model relies on its internal knowledge (which is limited to 2021) and doesn’t pay attention to the context at all.

Q: Please answer the given question based on the context. The answer should be exact 'yes' or 'no'.

context: The collision between the two trains occurred at 11:30 pm on the Athens-Thessaloniki mainline, which is operated by OSE, the Greek national railway company. The section where the accident took place, located 27.3 kilometres (17.0 miles) north of Larissa, was double-tracked and equipped with automatic controls, but switching and signalling were still being controlled manually. The passenger train and the freight train collided head-on halfway between the municipalities of Tempi and Evangelismos just before midnight, as the passenger train was exiting the tunnel under the E75 roadway that crossed over the tracks.

question: Did the collision occur at half past 11 in the evening?

ChatGPT: No.

Expected: Yes

DeepPavlov: Yes

It's strange, but ChatGPT sometimes can't match a numeric time value with a verbal one. An example was also taken from the “future”, which is unknown to ChatGPT.

In all the above examples, the model from the DeepPavlov Library responds correctly. Just like in the examples for SQUAD on the BoolQ dataset, ChatGPT makes mistakes when it needs to answer about time and when it needs to generalize some information.

Also, I noticed that ChatGPT relies heavily on information that was memorized during training. So, if we take a real fact as a context, but add false information to it, then ChatGPT mostly will give an answer using real information instead of fictional. Examples:

Q: Please answer the given question based on the context. The answer should be exact 'yes' or 'no'.

context: David Robinson (basketball player) -- Robinson is a 10-time NBA All-Star, the 1995 NBA MVP, a two-time NBA Champion (1999 and 2003) and a two-time Olympic Gold Medal winner (1992, 1996). He is widely considered one of the greatest centers in both college basketball and NBA history. To date, Robinson is the only player from the Naval Academy to play in the NBA.

question: Does David Robinson play basketball?

ChatGPT: No.

Expected: Yes

DeepPavlov: Yes

If you ask ChatGPT to explain the answer to the question asked, it will reply that David Robinson has retired and no longer plays basketball. But this doesn’t follow from the context in any way.

Q: Please answer the given question based on the context. The answer should be exact 'yes' or 'no'.

context: Sweet potato -- The sweet potato (Ipomoea batatas) is a dicotyledonous plant. Its large, starchy, sweet-tasting, tuberous roots are a root vegetable. The young leaves and shoots are sometimes eaten as greens. The sweet potato and potato (Solanum tuberosum) belong to the nightshade family.

question: are potatoes and sweet potatoes from the same family?

ChatGPT: No.

Expected: Yes

DeepPavlov: Yes

In the original text, it’s indeed said that sweet potato and potato don’t belong to the same family. But again, in the given context it’s clearly written that they belong to the same family – nightshade, which was completely ignored by ChatGPT.

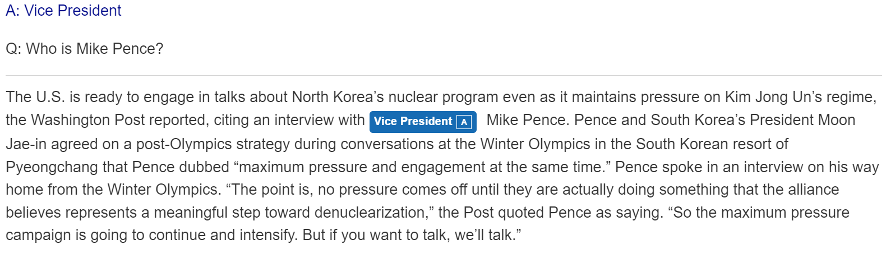

Question-answering NLI (QNLI)

The last QA task on which I compared the results of the DeepPavlov Library and ChatGPT is question answering entailment or QNLI dataset. QNLI (Question Natural Language Inference) is a natural language understanding (NLU) task where a model is trained to determine the relationship between two sentences: the context sentence (question) and the hypothesis sentence (answer). The objective is to assess whether the hypothesis sentence is entailment or not, given the context sentence.

To solve the QNLI problem using ChatGPT, the following prompt from the article was used:

Given the question: "['question']"

Determine if the following sentence contains the corresponding answer: "['sentence']"

Most of the time, ChatGPT manages to choose the correct answer. But I was able to confirm the results of the experiments: ChatGPT responds much worse than BERT-based models on the QNLI task. I didn’t manage to find new classes of ChatGPT model mistakes—basically, these all fall under the same classes of temporary mistakes (mistakes related to the inability to reason about events and their order in time) and logical mistakes (mistakes related to the inability to do deductive or inductive reasoning).

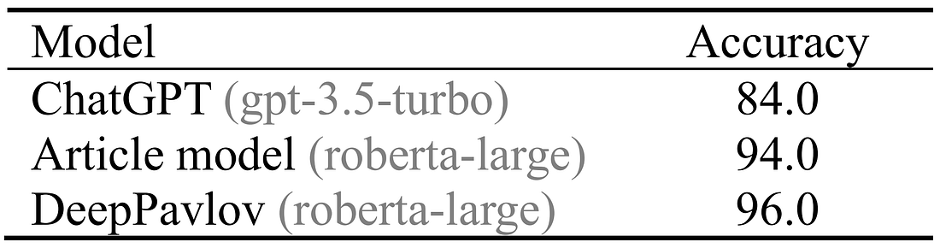

Also, I evaluated the DeepPavlov model on the sample from the dev set as in paper. The results are as follows. The pretrained QNLI models in DeepPavlov outperforms ChatGPT and the models from the article.

A few examples of mistakes:

Q: Given the question: "What is the minimum required if you want to teach in Canada?"

Determine if the following sentence contains the corresponding answer: "In most provinces a second Bachelor's Degree such as a Bachelor of Education is required to become a qualified teacher."

ChatGPT: Yes, the following sentence contains the corresponding answer to the given question.

Expected: No (not_entailment)

DeepPavlov: not_entailment

At first sight, even a person may think that the above sentence contains an answer to the question. But the key word in the question is “minimum”—with nothing about it in the sentence.

Q: Given the question: "What day of the week did Shark Tank debut on?"

Determine if the following sentence contains the corresponding answer: "Shark Tank (based on the Dragon's Den reality format) also became a midseason sleeper hit on Sundays in the spring of 2010; the following season, it became the tentpole of the network's Friday night schedule, gradually helping make ABC a strong competitor (after being paired with 20/20 and beginning with the 2012–13 season, the Tim Allen sitcom Last Man Standing) against CBS' long-dominant drama/reality lineup on that night for the first time since the 'TGIF' lineup ended in 2000."

ChatGPT: No, the sentence doesn't provide the answer to the given question.

Expected: Yes (entailment)

DeepPavlov: entailment

In the above example, we can again notice that ChatGPT can be easily deceived. The sentence from the example doesn’t explicitly indicate which day of the week the debut took place on, but implicitly it can be concluded that it was on Sunday.

Q: Given the question: "What thesis specifies that a polynomial relationship exists within time complexities in a computational model?"

Determine if the following sentence contains the corresponding answer: "This forms the basis for the complexity class P, which is the set of decision problems solvable by a deterministic Turing machine within polynomial time."

ChatGPT: Yes, the provided sentence contains the corresponding answer.

Expected: No (not_entailment)

DeepPavlov: not_entailment

Again, if you aren't familiar with the information given in the question, you cannot say that the answer is given in the provided sentence. Perhaps ChatGPT once again used its internal knowledge to solve the task. In general, ChatGPT seems to have limitations in terms of logical reasoning and contextual understanding. As a result, it may struggle with questions that are relatively simple for humans.

In addition to the problems outlined above, ChatGPT has other drawbacks. The responses generated by ChatGPT can be inconsistent and, at times, contradictory. The model’s answers may vary when asked the same question, and its performance may also be influenced by the order in which the questions are asked.

How to use DeepPavlov Library for QA?

To get started with the DeepPavlov Library, first you need to install it using the following command:

pip install deeppavlovAfter that, you can interact with the models. Here is the code that allows you to use the pre-trained model on SQuAD 2.0 from DeepPavlov, which answers questions about the passage:

from deeppavlov import build_model

model = build_model('', download=True, install=True)

model(["Company X releases new models every year on the 1st of September. K-3 model was released in 2003. K-1 model that was released in 2001 and K-2 model released in 2002 are the only models before the release of K-3 model."], ["What is the name of the model released one year before the release of K-2 model?"])

# [['GPT-5'], [28], [0.99]]If you want to use the BoolQ model, first you need to run the build_model command. Afterwards, it is enough to call the model:

from deeppavlov import build_model

model = build_model('superglue_boolq_roberta_mnli', download=True, install=True)

model(["Are a short cape and a long cloak the same?"],["Cloak – Ladies may wear a long cloak called a short cape, or a full-length cloak. Gentlemen wear an ankle-length or full-length cloak. Formal cloaks often have expensive, colored linings and trimmings such as silk, satin, velvet and fur."])

# ['True']

To use the QNLI model from DeepPavlov Library, you should run the following:

from deeppavlov import build_model

model = build_model('glue_qnli_roberta', download=True, install=True)

model(["What is the minimum required if you want to teach in Canada?"],["In most provinces a second Bachelor's Degree such as a Bachelor of Education is required to become a qualified teacher."])

# ['not_entailment']

The result of the model will be the response entailment or not_entailment. More information about using the DeepPavlov Library is available here.

Conclusion

This article was a short overview of comparison between ChatGPT and DeepPavlov models on the selected QA tasks. Despite the fact that OpenAI’s model is becoming more and more popular, in certain scenarios it’s inferior to existing solutions. As I have shown on one of the most common tasks — Question Answering — it’s more effective to use models trained to solve specialized problems. With them, you can achieve results of much higher quality, and the effectiveness of such models is way ahead of ChatGPT as they work faster and consume less resources. You can find out more about ChatGPT drawbacks in paper.

Sources:

[1] J. Kocoń et. al., ChatGPT: Jack of all trades, master of none (2023), Arxiv publications

[2] C. Qin et. al., Is ChatGPT a General-Purpose Natural Language Processing Task Solver? (2023), Arxiv publications

[3] Q. Zhong et. al., Can ChatGPT Understand Too? A Comparative Study on ChatGPT and Fine-tuned BERT (2023), Arxiv publications

[4] A. Borji et. al., A Categorical Archive of ChatGPT Failures (2023), Arxiv publications