Hello everyone and welcome!

We are happy to share our new DeepPavlov Library releases v0.17.0 & v0.17.1! In these releases we’ve shipped the Relation Extraction and ReCoRD models, as well as the alpha version of the DeepPavlov Python-Native Pipelines.

We are eager to hear your thoughts about these new features!

Library

New Features

Relation Extraction Model

Formally, relation extraction (RE) is a sub-task of information extraction that involves finding and classifying the semantic relations between entities in unstructured text. Its principal practical applications are building new databases and augmenting existing ones. An extensive collection of relational triples (i.e., two entities and the relation that holds between them) can later be converted into a structured database of facts about the real world, potentially of even better quality than manually created ones.

In release v0.17.0 we introduced a new RE model, currently available for both English and Russian. As the names suggest, its two core ideas are Adaptive Thresholding and Localized Context Pooling.
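As a rough illustration of the adaptive thresholding idea (a sketch of the general technique, not DeepPavlov's actual code): instead of applying one global cut-off, the model scores a dedicated threshold class for each entity pair and keeps only the relations whose score exceeds it. The function name `predict_relations` and the placement of the threshold class at index 0 are assumptions made for this example.

```python
def predict_relations(logits, threshold_index=0):
    """Return indices of relation classes that score above the threshold class.

    `logits` holds one score per class for a single entity pair.
    Assumption: the learned threshold class sits at `threshold_index`.
    """
    threshold = logits[threshold_index]
    return [
        i
        for i, score in enumerate(logits)
        if i != threshold_index and score > threshold
    ]


# Classes 1 and 3 beat the threshold score of 1.0, so both are predicted.
predicted = predict_relations([1.0, 2.5, 0.3, 1.7])
# No class beats the threshold score of 3.0, so the pair has no relation.
no_relation = predict_relations([3.0, 1.0, 2.0])
```

An empty result corresponds to "no relation" for that entity pair, which lets the cut-off vary from pair to pair rather than being tuned once for the whole dataset.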

You can learn more about the supported relations and other details in the official documentation. The English RE model outputs the Wikidata relation ID and the English relation name. The Russian RE model additionally outputs the Russian relation name, if one is available.

This model was developed by one of our brave GSoC 2021 students, Anastasiia Sedova. See the blog post about her Summer of Code with DeepPavlov to learn more about her experience of working with us and about Relation Extraction in DeepPavlov.

ReCoRD Model

Pre- and post-processing, training, evaluation pipelines, relevant metrics, a configuration file, and a pre-trained model for the Reading Comprehension with Commonsense Reasoning Dataset (ReCoRD) were added to the library in release v0.17.0.

In ReCoRD, a model is given a passage (generally, a short news article), a masked query (a statement based on the passage with a certain entity hidden), and a list of all entities found in the passage. It is then tasked with identifying the entities that can replace the mask in the query so that the resulting statement is true in the context of the passage.

In our implementation, we formulate this problem as binary classification of pairs, each consisting of a passage and a corresponding query in which the mask is replaced with a candidate entity. The model effectively evaluates the plausibility of a given pair of text fragments. As a result, there’s a pair for every candidate entity, and each pair is classified independently of all other entities. Naturally, this increases the size of the dataset considerably. To deal with this, we downsampled negative examples (i.e., incorrect entities for a given query). Accordingly, several pre- and post-processing steps were added.
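The pair construction and negative downsampling described above can be sketched roughly as follows. This is a minimal illustration, not the library's implementation: the function names, the `neg_per_pos` ratio, and the `@placeholder` mask token are assumptions made for the example.

```python
import random

MASK = "@placeholder"  # assumption: the token that marks the hidden entity


def build_pairs(passage, query, entities, answers, neg_per_pos=1, seed=0):
    """Turn one ReCoRD item into (passage, filled_query, label) examples."""
    rng = random.Random(seed)
    positives, negatives = [], []
    for entity in entities:
        filled_query = query.replace(MASK, entity)
        label = int(entity in answers)
        example = (passage, filled_query, label)
        (positives if label else negatives).append(example)
    # Downsample negatives: keep at most `neg_per_pos` per positive example.
    keep = min(len(negatives), neg_per_pos * max(len(positives), 1))
    return positives + rng.sample(negatives, keep)


def pick_answer(entities, scores):
    """Post-processing: choose the candidate whose pair scored highest."""
    best = max(range(len(entities)), key=lambda i: scores[i])
    return entities[best]


passage = "Alice met Bob in Paris. Carol stayed in Rome."
query = "@placeholder travelled to Paris to meet Bob."
entities = ["Alice", "Bob", "Carol", "Rome"]
pairs = build_pairs(passage, query, entities, answers={"Alice"})
```

With one correct entity and `neg_per_pos=1`, the four candidates yield two training examples instead of four. At inference time every candidate is scored, and a step like `pick_answer` maps the independent pair scores back to a single entity.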

Introducing DeepPavlov Python-Native Pipelines

On our long path to DeepPavlov v1.0, we envisioned that newcomers would be able to build new DeepPavlov pipelines right within their Python code, without the need to author our JSON config files. For that, we’ve introduced new Model and Element classes that simplify building DeepPavlov models directly in Python.

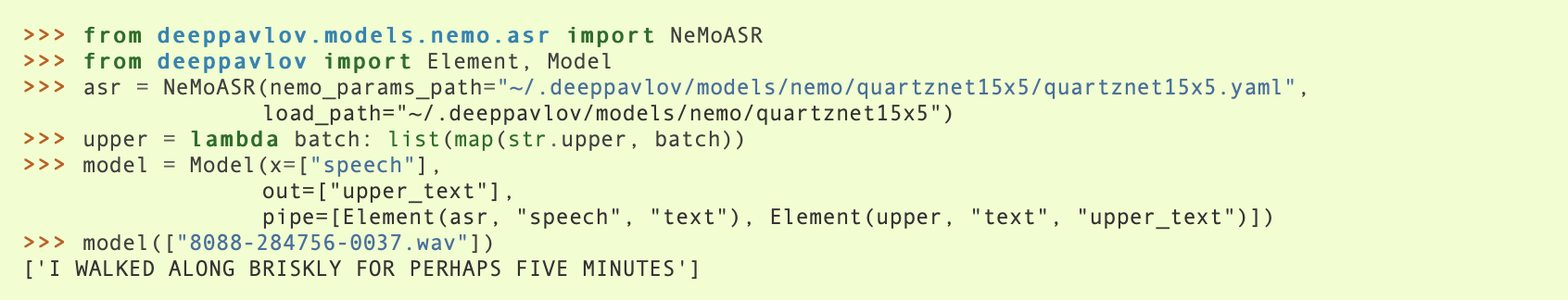

Here’s a very simple example:

Here, we use the power of Python to construct DeepPavlov pipelines that can include both DeepPavlov models and custom code, and then execute them within your Python code.

While this example is quite simple, one can combine our models and custom methods to solve more sophisticated tasks, such as triplet extraction from text. That task would require a combination of our NER, Entity Linking, and Relation Extraction models to produce an output.

This functionality is very early work and we can’t wait to hear your feedback about it.

To learn more, take a look at our tutorial.

We encourage you to begin building your Conversational AI systems with our DeepPavlov Library on GitHub and let us know what you think! Feel free to test our BERT-based models by using our demo. And keep in mind that we have a dedicated forum, where any questions concerning the framework and the models are welcome.

Follow @deeppavlov on Twitter.